Akarsh Kumar

[Twitter] [Google Scholar] [GitHub]

Contact

akarshkumar0101(at)gmail(dot)com

Bio

I'm a Ph.D. student at MIT CSAIL working with Phillip Isola.

I'm also a research intern at Sakana AI working with Yujin Tang and David Ha.

I collaborate with Ken Stanley, Jeff Clune, and Joel Lehman.

My research is supported by the NSF GRFP!

Previously, I graduated from UT Austin where I worked with Peter Stone, Risto Miikkulainen, and Alexander Huth.

Research

I want to understand intelligence and all the different processes from which it emerges.

More specifically, I'm interested in:

- Applying principles from natural evolution and artificial life to create better AI.

- Open-ended processes which keep complexifying and creating "interesting" artifacts indefinitely.

- Emergent intelligence from scratch, without internet data, like how natural evolution created us.

Publications

(DRQ) Digital Red Queen: Adversarial Program Evolution in Core War with LLMs

arXiv 2026

TLDR: Core War is a game where assembly programs fight against each other for control of a Turing-complete virtual machine. We use LLMs to evolve these programs in an adversarial arms race. We found evidence of convergent evolution across different runs.

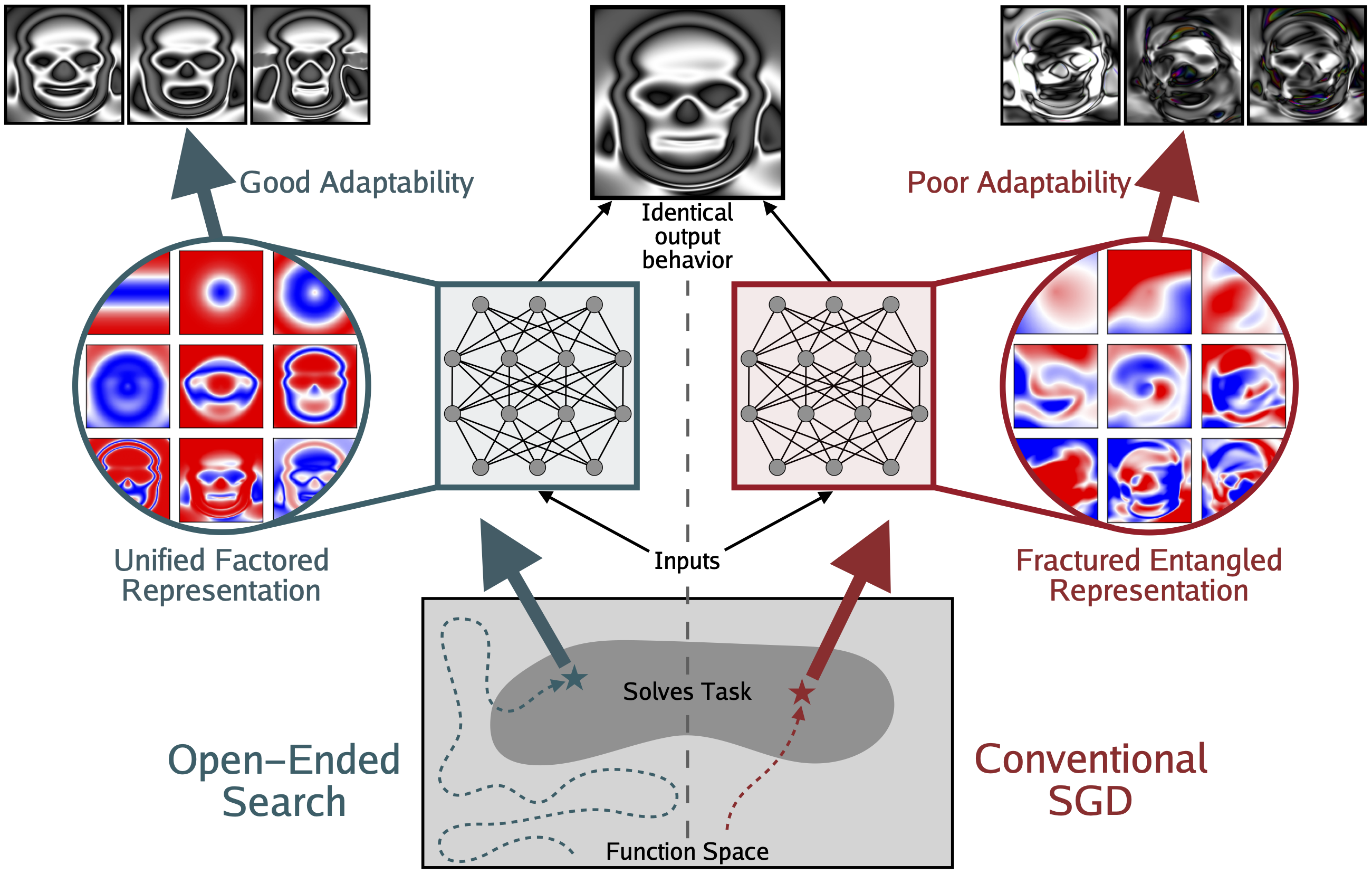

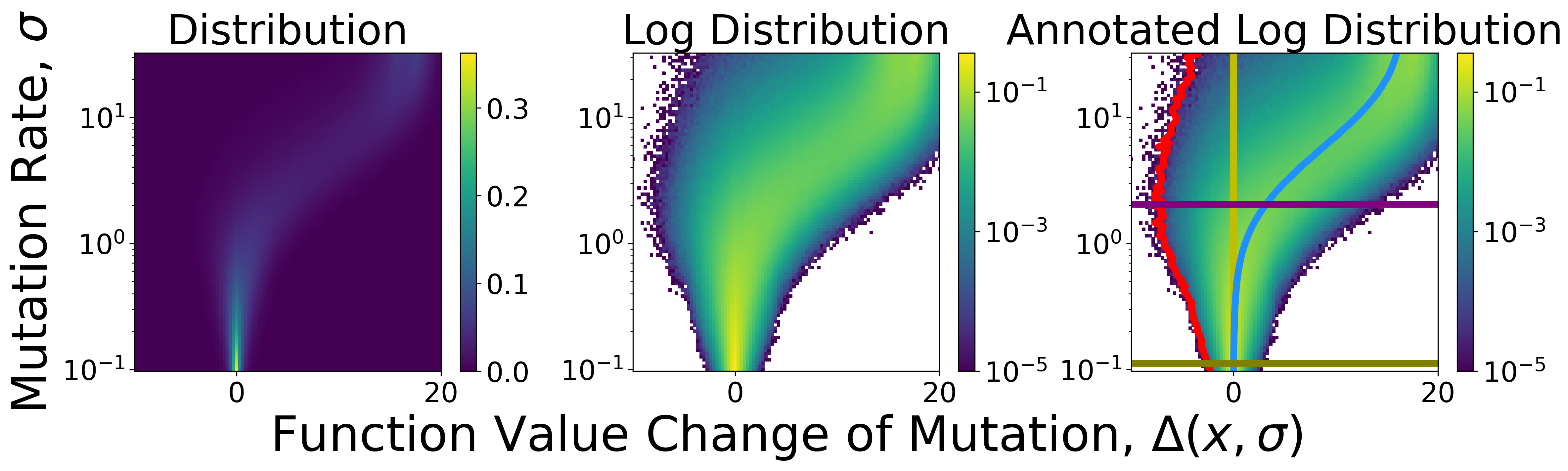

(FER) Questioning Representational Optimism in Deep Learning: The Fractured Entangled Representation Hypothesis

arXiv 2025

TLDR: Modern deep learning produces good output behavior but spaghetti-like internal representations. Picbreeder is an example of an open-ended evolutionary algorithm that produces networks with qualitatively more organized internal representations.

[Arxiv] [Code] [TLDR] [MLST Teaser] [MLST Podcast]

(ASAL) Automating the Search for Artificial Life with Foundation Models

ALife Journal 2025 (Best Oral at ALIFE 2025)

TLDR: ASAL is a new ALife paradigm using VLMs to search for (1) target, (2) open-ended, and (3) diverse simulations. ASAL helps us understand "life as it could be" in arbitrary artificial worlds.

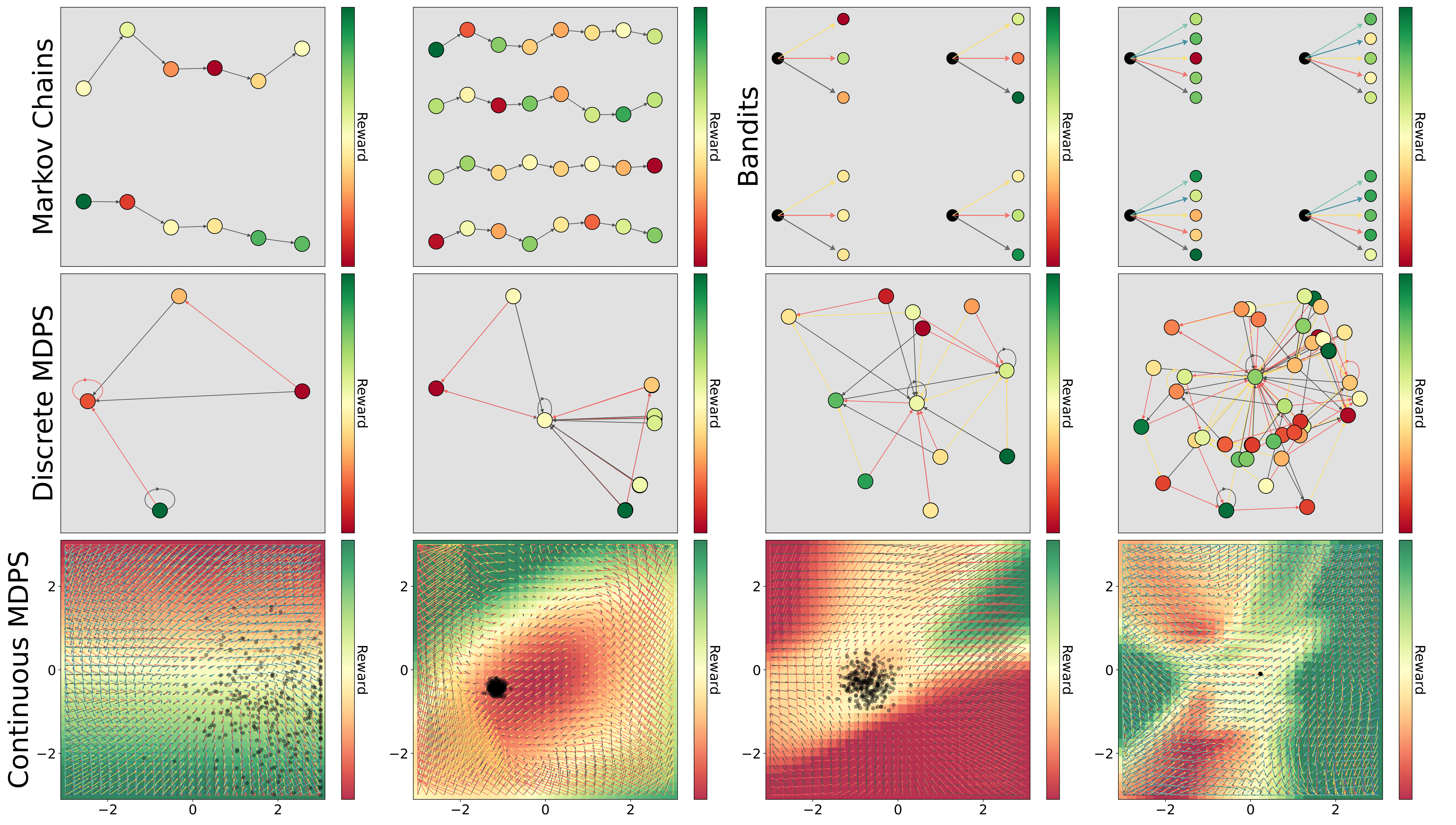

Learning In-Context Decision Making with Synthetic MDPs

AutoRL @ ICML 2024

TLDR: Generalist in-context RL agents trained only on synthetic MDPs generalize to real MDPs.

[Paper]

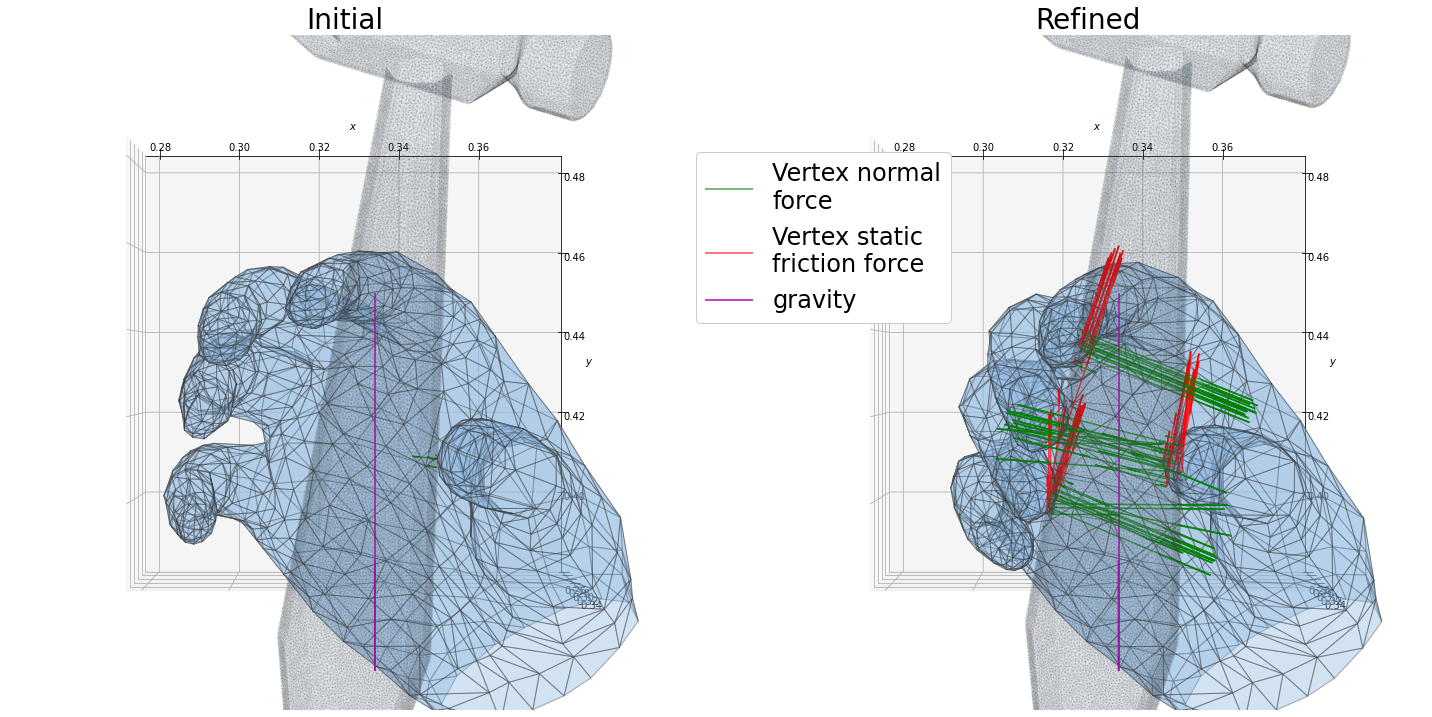

Physically Plausible Pose Refinement using Fully Differentiable Forces

EPIC @ CVPR 2021

TLDR: Accurately refining pose estimates by differentiably modeling the physics of the scene.

[Arxiv] [Presentation]

Talks

Towards a Platonic Intelligence with Unified Factored Representations

Michael Levin's Platonic Space Symposium, November 2025

[Video]

Emergence and Complexity in Artificial Life

Machine Learning Street Talk, October 2025

[Video (Coming Soon)]

FER, Imposter Intelligence, and What's Next

Machine Learning Street Talk, June 2025

[Teaser Video]

[Podcast Video]

The Fractured Entangled Representation Hypothesis

Sakana AI, August 2025

Automating the Search for Artificial Life with Foundation Models

ALIFE Oral Presentation, October 2025

[Video (Coming Soon)]

Automating the Search for Artificial Life with Foundation Models

Speaker at Detection and Emergence of Complexity Conference, May 2025

[Video]

[DEMECO Website]

Automating the Search for Artificial Life with Foundation Models

MIT Embodied Intelligence Seminar, March 2025

[Video]

[Seminar Website]

Automating the Search for Artificial Life with Foundation Models

Kaiming He's Lab, March 2025

Automating the Search for Artificial Life with Foundation Models

Michael Levin's Lab, April 2025

[Video]

Automating the Search for Artificial Life with Foundation Models

Jeff Gore's Lab, March 2025

Automating the Search for Artificial Life with Foundation Models

Harvard Emergence Journal Club, December 2025

Learning In-Context Decision Making with Synthetic MDPs

AutoRL @ ICML 2024, May 2024

[Video]